Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

GPU parallel computing for machine learning in Python: how to build a parallel computer, Takefuji, Yoshiyasu, eBook - Amazon.com

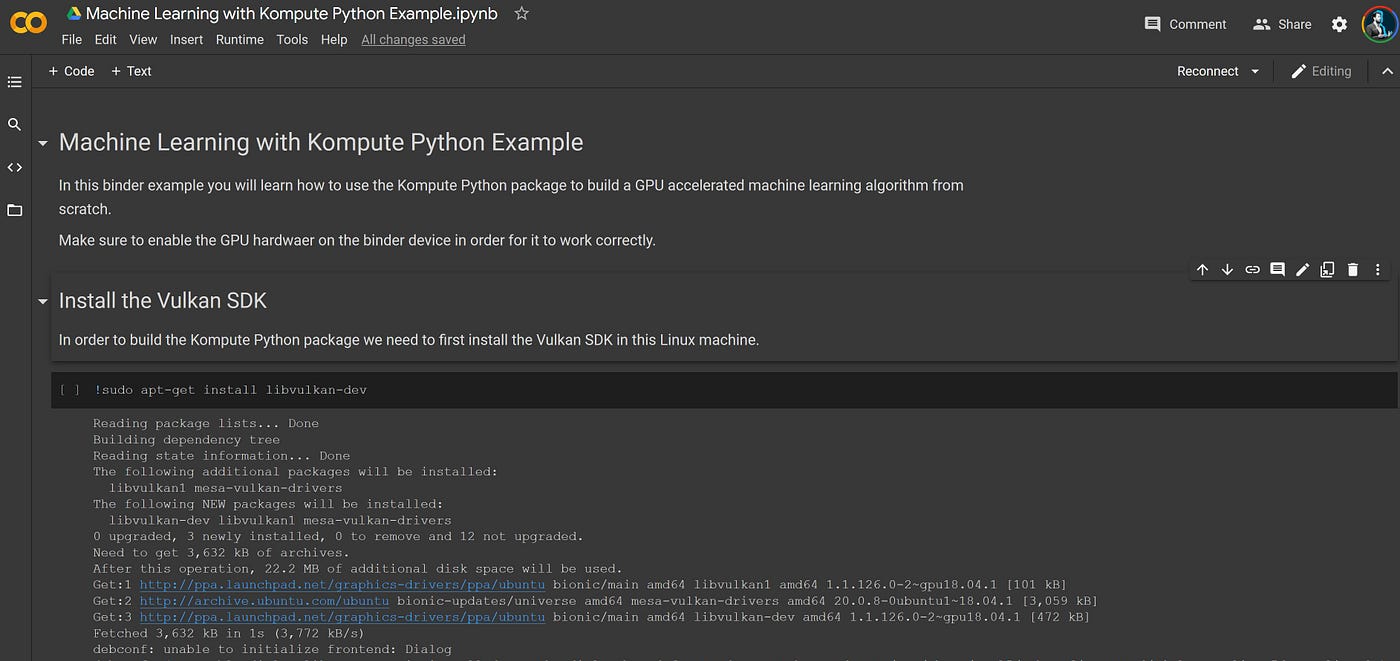

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Best Python Libraries for Machine Learning and Deep Learning | by Claire D. Costa | Towards Data Science

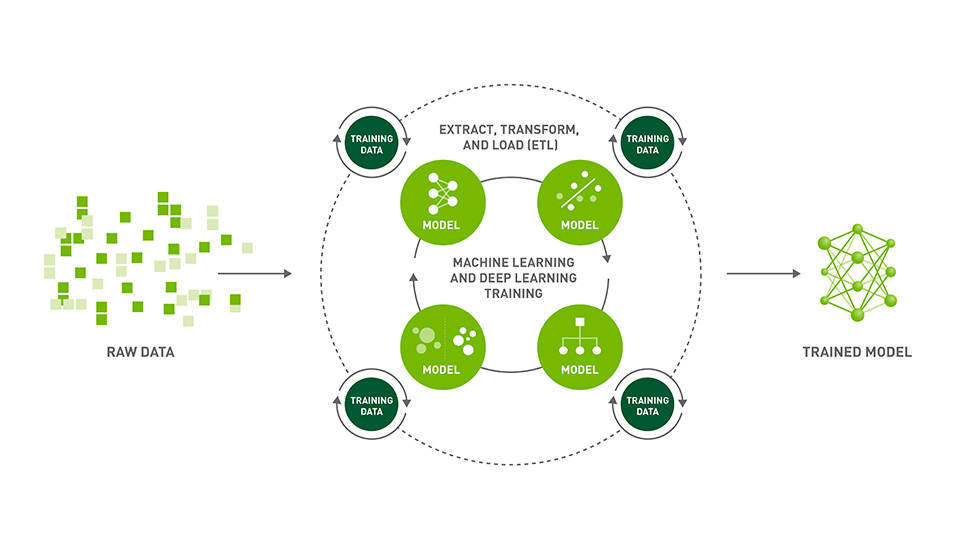

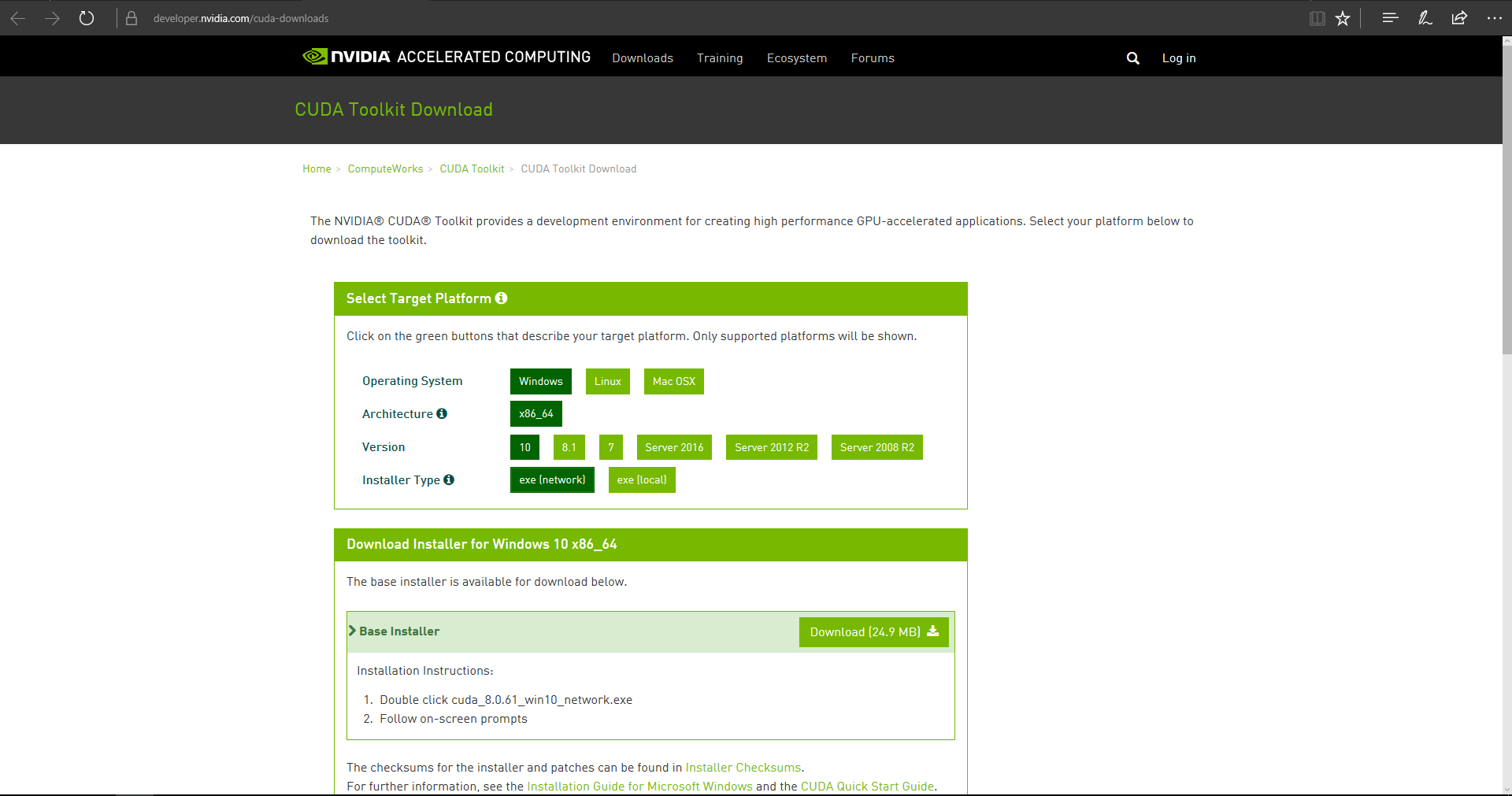

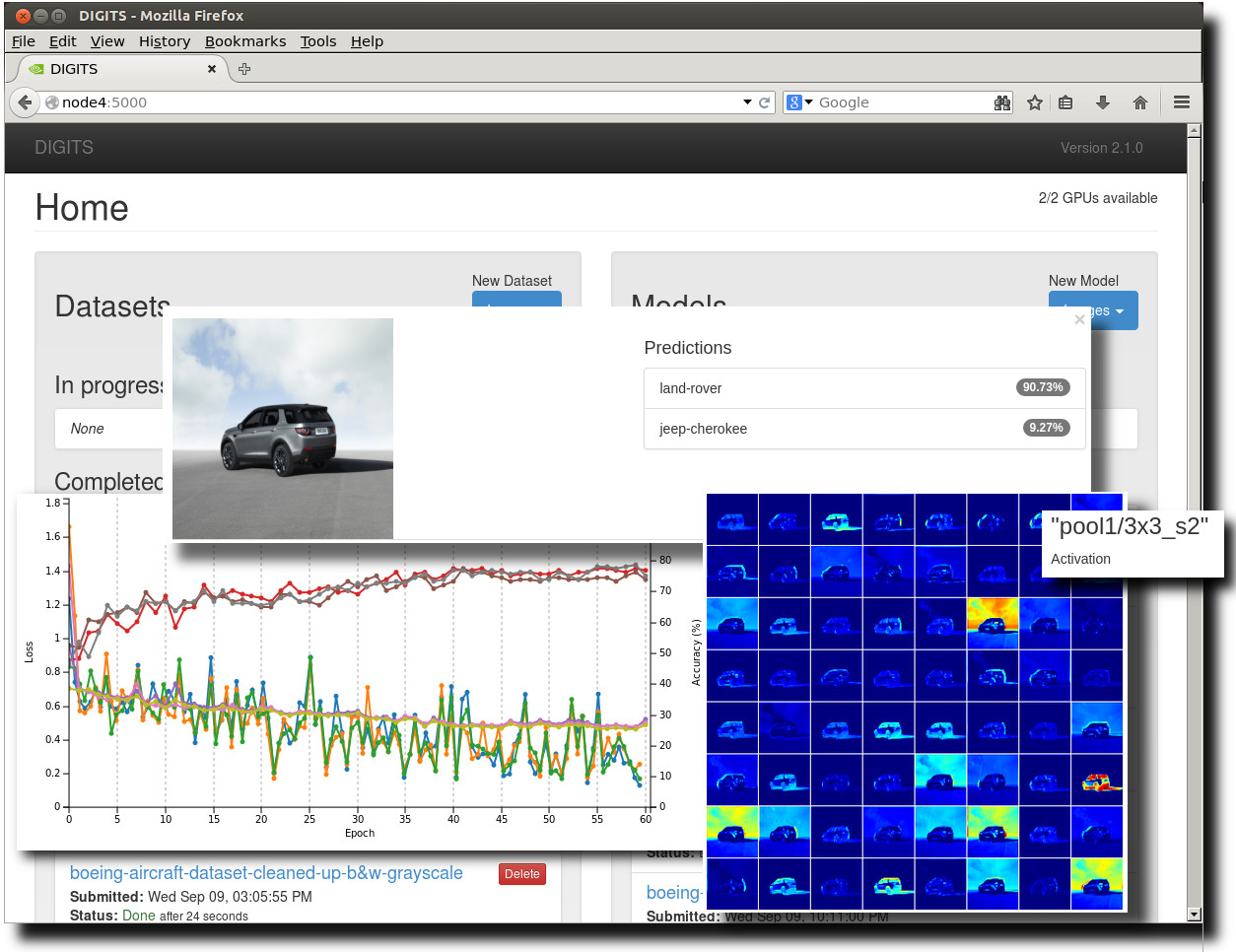

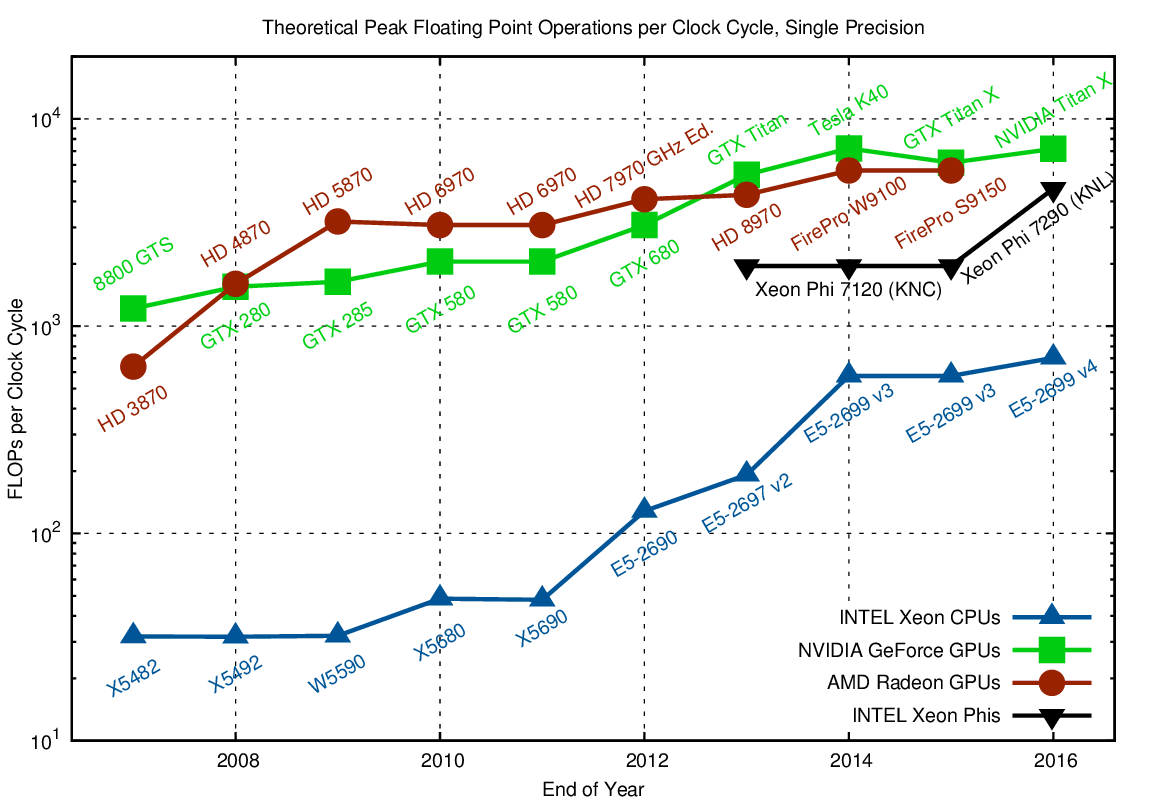

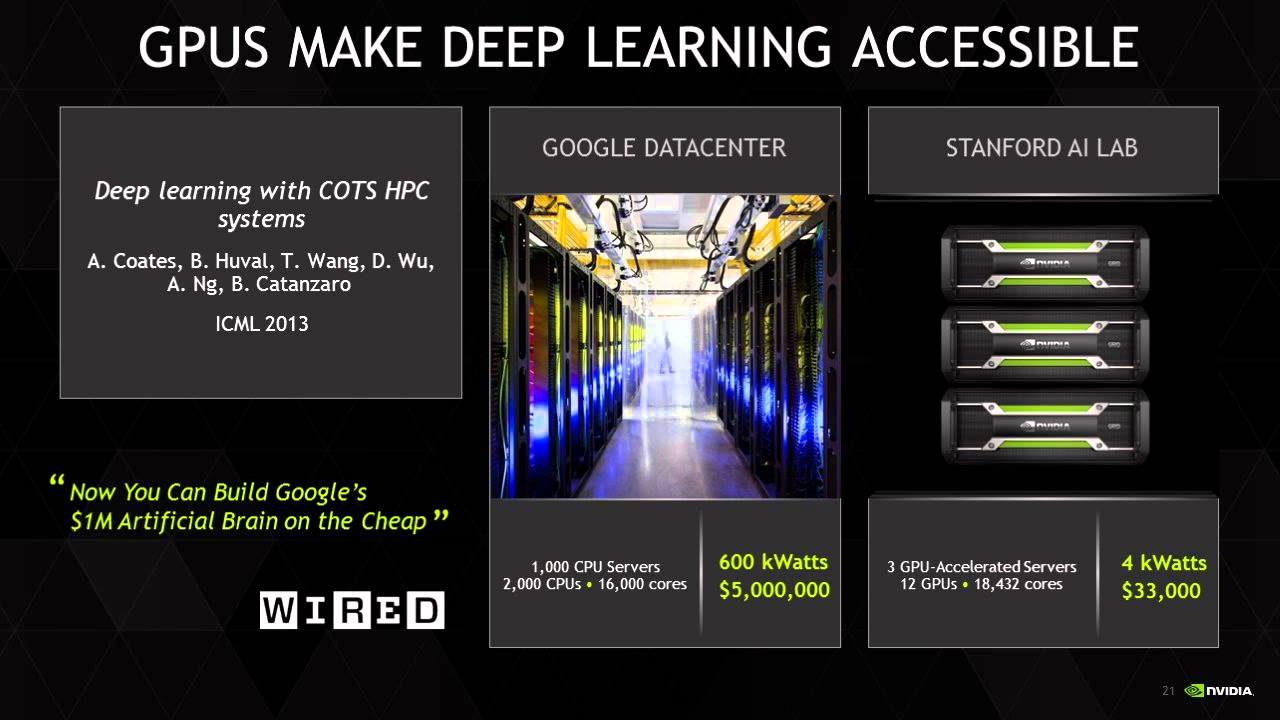

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

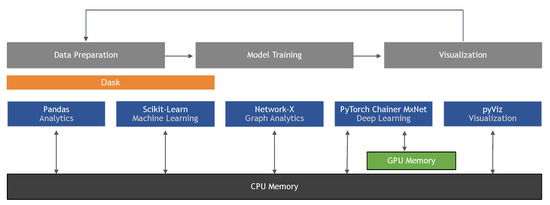

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

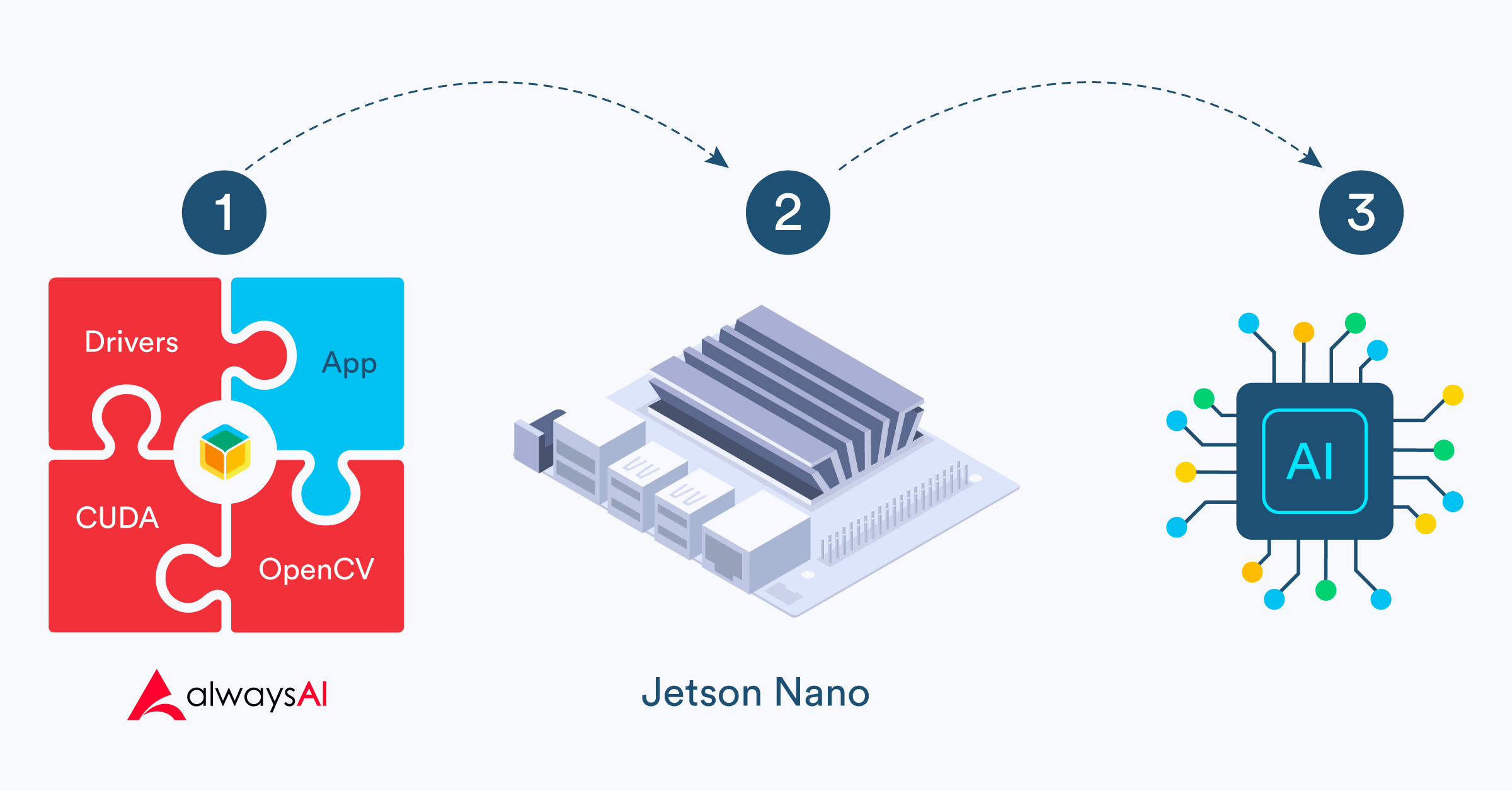

RAPIDS is an open source effort to support and grow the ecosystem of... | Download Scientific Diagram

H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai