Deep Learning GPU Server, starting at $9,990: dual AMD EPYC (32, 64, 128, or 192 cores), 8-GPU NVIDIA configurations (RTX 6000 Ada, A5000, A6000, A100, H100, Quadro RTX)

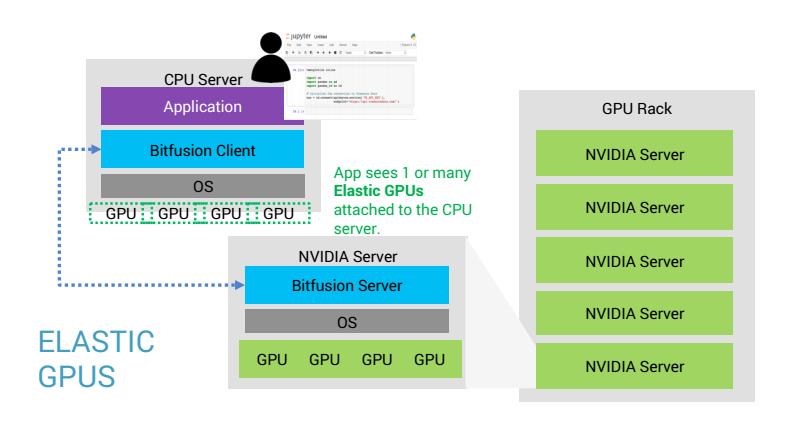

Performance Comparison of Containerized Machine Learning Applications Running Natively with Nvidia vGPUs vs. in a VM – Episode 4 - VROOM! Performance Blog

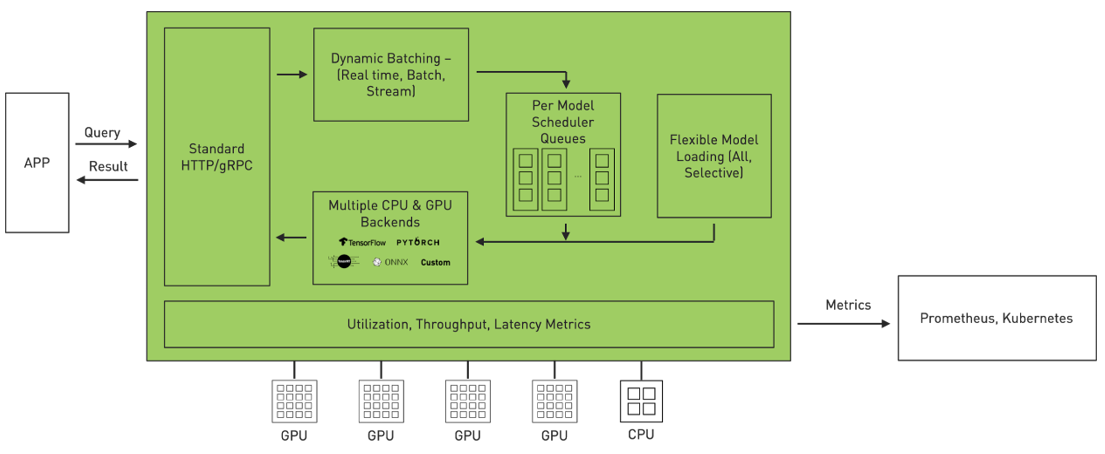

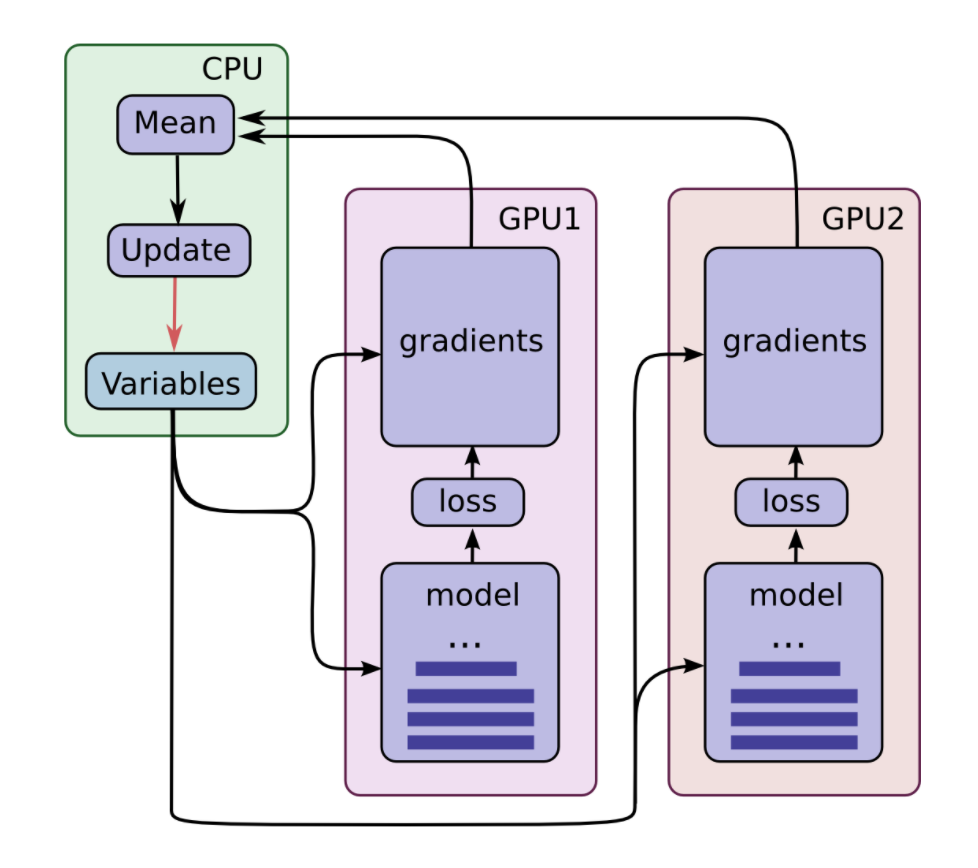

Deploying a PyTorch model with Triton Inference Server in 5 minutes | by Zabir Al Nazi Nabil | Medium

Deploy fast and scalable AI with NVIDIA Triton Inference Server in Amazon SageMaker | AWS Machine Learning Blog